Safe and Assured Autonomy

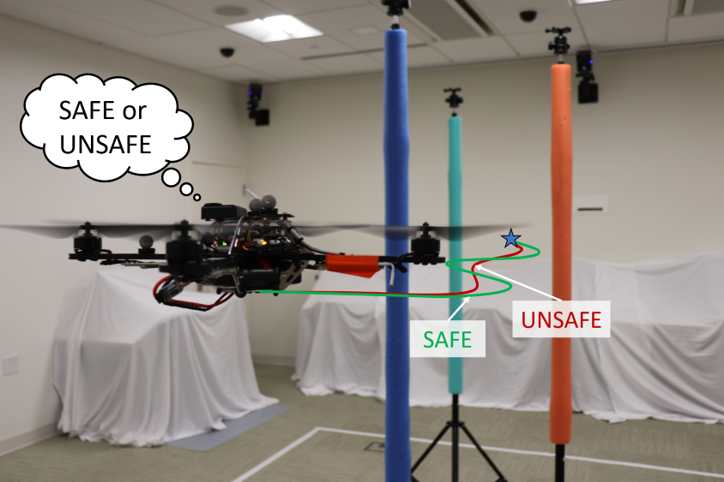

Autonomous systems can be subject to uncertainty arising from external disturbances or from internal learning-based components. Because these systems are often deployed in safety-critical settings, it is important to assess their safety both before and during deployment. Our research uses reachability analysis and neural network verification techniques to give safety-critical autonomous systems these assessment capabilities.
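As a rough illustration of what reachability analysis computes for a system with a learned controller (a "neural feedback loop"), the sketch below over-approximates the set of states reachable from a box of initial states. It assumes a linear system x_{t+1} = A x_t + B u_t, a small ReLU network policy u_t = π(x_t) with arbitrary illustrative weights, and plain interval arithmetic. The helper names and all numbers are hypothetical. This is a forward, interval-based sketch, much looser than the methods in the publications listed on this page, and not the backward technique of the first one.

```python
import numpy as np


def affine_bounds(W, b, lo, hi):
    """Bound W @ x + b over the box lo <= x <= hi (center/radius form)."""
    center = (lo + hi) / 2.0
    radius = (hi - lo) / 2.0
    new_center = W @ center + b
    new_radius = np.abs(W) @ radius
    return new_center - new_radius, new_center + new_radius


def relu_net_bounds(layers, lo, hi):
    """Interval bound propagation through affine layers with ReLU between them."""
    for i, (W, b) in enumerate(layers):
        lo, hi = affine_bounds(W, b, lo, hi)
        if i < len(layers) - 1:  # no ReLU after the output layer
            lo, hi = np.maximum(lo, 0.0), np.maximum(hi, 0.0)
    return lo, hi


def forward_reachable_boxes(A, B, layers, lo, hi, steps):
    """Return boxes that contain every state reachable at t = 0..steps."""
    n = A.shape[0]
    boxes = [(lo, hi)]
    for _ in range(steps):
        u_lo, u_hi = relu_net_bounds(layers, lo, hi)
        # Bound A x and B u separately; treating x and u as independent
        # loses their correlation, so the result is sound but conservative.
        ax_lo, ax_hi = affine_bounds(A, np.zeros(n), lo, hi)
        bu_lo, bu_hi = affine_bounds(B, np.zeros(n), u_lo, u_hi)
        lo, hi = ax_lo + bu_lo, ax_hi + bu_hi
        boxes.append((lo, hi))
    return boxes


# Illustrative setup: a discrete-time double integrator and a random policy.
rng = np.random.default_rng(0)
A = np.array([[1.0, 1.0], [0.0, 1.0]])
B = np.array([[0.5], [1.0]])
layers = [(rng.normal(size=(8, 2)) * 0.3, np.zeros(8)),
          (rng.normal(size=(1, 8)) * 0.3, np.zeros(1))]
x0_lo, x0_hi = np.array([2.5, -0.25]), np.array([3.0, 0.25])

boxes = forward_reachable_boxes(A, B, layers, x0_lo, x0_hi, steps=5)
for t, (lo, hi) in enumerate(boxes):
    print(t, lo.round(3), hi.round(3))
```

If every box stays clear of an unsafe region, the closed loop is verified safe over that horizon. Because interval bounds tend to grow with each step, practical tools use tighter relaxations and partition the initial set.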

Relevant Publications:

- N. Rober, S. M. Katz, C. Sidrane, E. Yel, M. Everett, M. J. Kochenderfer, and J. P. How, “Backward Reachability Analysis of Neural Feedback Loops: Techniques for Linear and Nonlinear Systems,” IEEE Open Journal of Control Systems, 2023 (Early Access).

- E. Yel, T. Carpenter, C. di Franco, R. Ivanov, Y. Kantaros, I. Lee, J. Weimer, and N. Bezzo, “Assured Run-time Monitoring and Planning: Towards Verification of Deep Neural Networks for Safe Autonomous Operations,” IEEE Robotics and Automation Magazine, Special Issue on Deep Learning and Machine Learning in Robotics, 2020.